What is a consolidation algorithm?

Overview

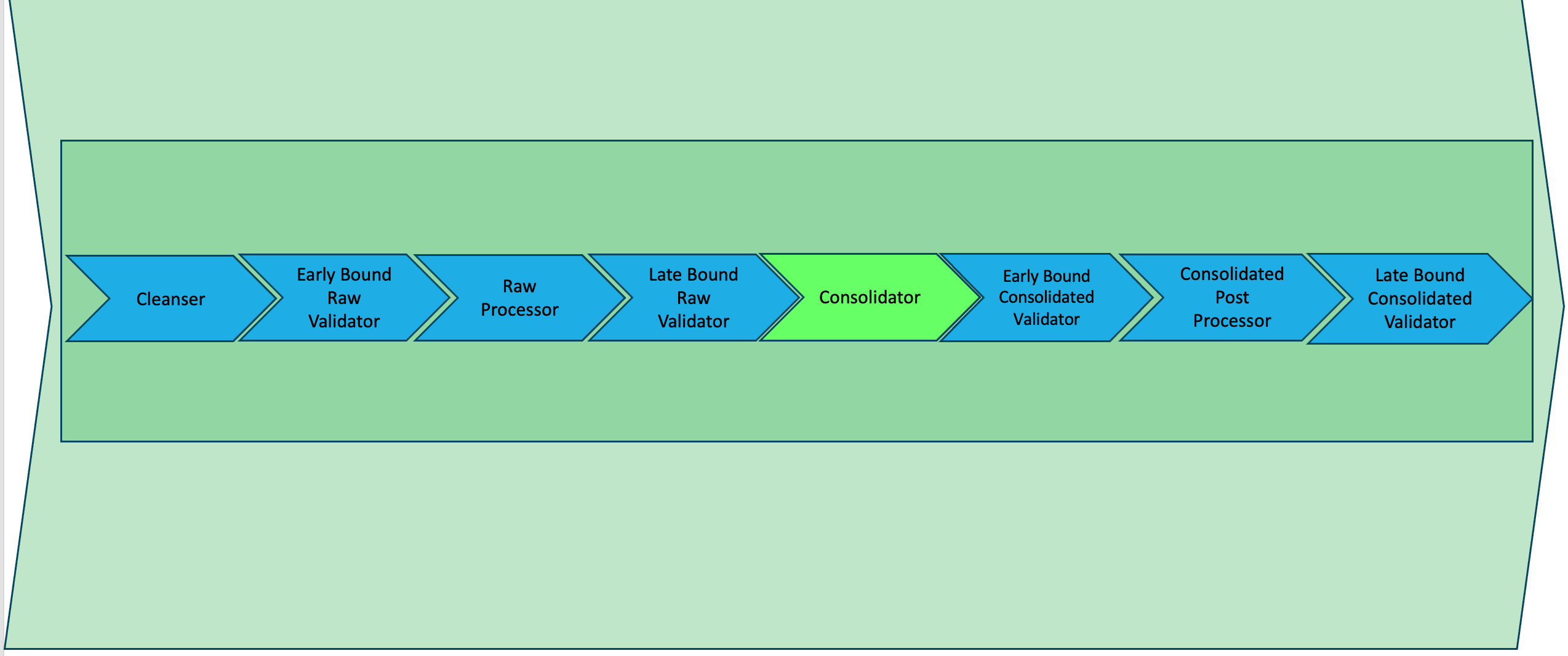

Consolidation algorithms are a type of processor defined within a schema. Their purpose is to consolidate data point values sourced from multiple feeds. If a schema is only associated with one feed then, obviously the value will be unchanged. One example of a consolidation algorithm is when and produces the mean value from 2 integers. Another example is when it selected the most recent value between all the feeds that were processed.

Below is an example of how to set a DECIMAL MAX consolidation algorithm within a schema data point of the numeric type "DECIMAL":

1 2 3 | |

In this case, when the consolidation pipeline phase occurs, each raw data point value associated with the above schema data point would be assessed and the largest decimal value would be set for the data point "COST".

NOTE: anywhere where

Default Consolidation Algorithms

If no consolidation algorithm is specified for a schema data point then depending on the data type of the data point, a different algorithm is selected:

- Data Type = Double -> DOUBLE_MEAN Consolidation Algorithm = DOUBLE MEAN

- Data Type = DECIMAL -> Default Consolidation Algorithm = DECIMAL MEAN

- Data Type = INTEGER -> Default Consolidation Algorithm = INTEGER MEAN

- Data Type = STRING -> Default Consolidation Algorithm = RECENT

- Data Type = BLOB -> Default Consolidation Algorithm = RECENT

- Data Type = JSON -> Default Consolidation Algorithm = RECENT

- Data Type = XML -> Default Consolidation Algorithm = RECENT

Algorithms List

Here are the existing consolidation algorithms: